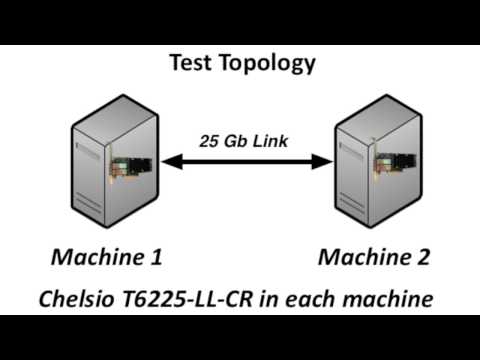

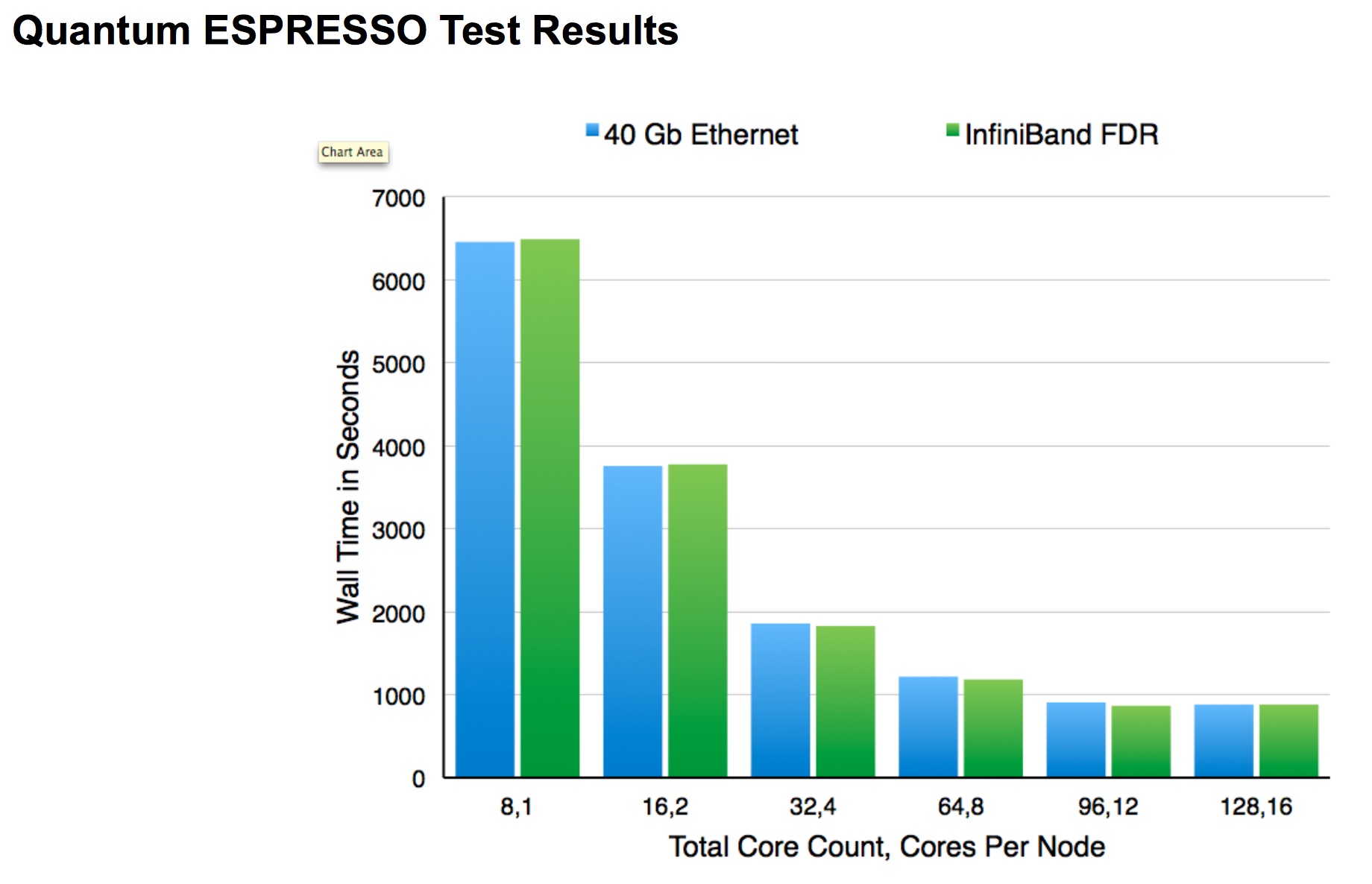

Chelsio’s Ethernet Solutions For Clusters and other High Performance Computing Applications

A cluster is a collection of computers configured to act as a single entity and used to solve complex performance, speed and availability problems. For a long time, clusters were used primarily to attack large science-related problems. In the past few years, however, they have been applied to problems in diverse sectors such as finance, nanotechnology and data mining.

One defining feature of a cluster is its need for a high-speed interconnect that moves data between nodes as fast as possible. Many early clusters used Ethernet as their interconnect because it was easy to use and ubiquitous. Ethernet was adequate at first, but it quickly became apparent that the speeds available at the time could not keep up with the required processing speed. As a result, other interconnects were developed. One of these was InfiniBand, which brought Remote Direct Memory Access (RDMA) to clusters. RDMA was a significant development because it shifted the work of moving data between nodes from the CPU to the network adapter, freeing the CPUs to work on the problem the cluster was intended to solve.

InfiniBand was a much-needed improvement to clustering, but it came at a high price. It requires an entirely separate network, including adapters and switches, to be added to the cluster, as well as trained staff to manage that network. More recently, another technology, Omni-Path, has emerged. Its characteristics are similar to InfiniBand’s, including the limitation of requiring a complete network infrastructure separate from the cluster’s control networks, which are typically Ethernet-based.

Since the development of InfiniBand, the Internet Engineering Task Force (IETF) has developed iWARP, also called RDMA over Ethernet, which works with existing Ethernet switches and routers. In the past, InfiniBand held a significant lead over Ethernet in speed and latency, but that has changed: with the introduction of 100Gb Ethernet, the difference has largely disappeared. Because of this, Ethernet is now considered a viable solution for new clusters, especially since iWARP can be used with common Ethernet switches and does not require special features such as Data Center Bridging (DCB) or Priority Flow Control (PFC). In fact, 41% of the top 500 supercomputers use Ethernet (10G or 1G) as their interconnect technology (source: top500.org).

In most cases, if not all, the same High Performance Computing applications will run on an iWARP cluster with no changes. This has been documented in a joint white paper by IBM and Chelsio, in which the LAMMPS, WRF and Quantum ESPRESSO applications were tested on Chelsio’s 40G iWARP adapters and Mellanox’s 56G InfiniBand ConnectX-3 adapters. Comparing the performance results, the paper concluded that Chelsio’s Plug-n-Play iWARP RDMA solution enabled the applications to run at equivalent performance levels.

Building on the adapter’s RDMA capability, Chelsio also provides GPUDirect RDMA for HPC applications. Using GPUDirect RDMA, NVIDIA GPUs resident in a cluster node can communicate directly over the iWARP interconnect with GPUs in other nodes.

In addition to iWARP, InfiniBand and Omni-Path, there is another recent RDMA technology: RDMA over Converged Ethernet (RoCE). RoCE comes in at least two flavors, RoCE v1 and RoCE v2. RoCE v1 is an Ethernet link layer protocol, whereas RoCE v2 is a newer version that runs on top of either UDP/IPv4 or UDP/IPv6, which provides the routability that RoCE v1 lacked. In both cases, however, RoCE requires complicated flow control to work correctly. This means one cannot use generic Ethernet switches, and the switches that must be used are very complicated to set up. It can be said that RoCE is still evolving, as new versions keep being developed when deficiencies are identified.

iWARP does not suffer from this constant re-engineering, since it runs on top of TCP/IP and thus inherits all the congestion control and resilient behavior that TCP/IP has provided for years. It is a tried and proven technology that just works. With its TCP Offload Engine (TOE), Chelsio increases the performance of TCP/IP immensely by running the TCP/IP stack on-chip, thereby eliminating much of the processing previously performed on the host.

Chelsio’s new sixth-generation Terminator 6 (T6) Unified Wire adapters enable a unified fabric over a single wire by simultaneously running all unmodified IP sockets and other RDMA applications over Ethernet at line rate. Designed specifically for virtualized datacenters, cloud service installations and HPC environments, the adapters bring a new level of performance metrics and functional capabilities to the networking space.
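
As a rough illustration of the layering differences described above, the sketch below classifies a frame from a few header fields. The Ethertype 0x8915 (RoCE v1) and UDP destination port 4791 (RoCE v2) are the registered values; the `FrameHeaders` type and the `classify_rdma_transport` function are hypothetical names introduced only for this example. Because iWARP is carried as ordinary TCP, the sketch can only report such traffic as TCP and does not claim to identify it as iWARP.

```python
from dataclasses import dataclass
from typing import Optional

ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_IPV6 = 0x86DD
ETHERTYPE_ROCE_V1 = 0x8915   # RoCE v1 sits directly on the Ethernet link layer
IPPROTO_TCP = 6
IPPROTO_UDP = 17
ROCE_V2_UDP_PORT = 4791      # registered UDP destination port for RoCE v2


@dataclass
class FrameHeaders:
    """Hypothetical, already-parsed header fields of one Ethernet frame."""
    ethertype: int
    ip_protocol: Optional[int] = None   # set only for IPv4/IPv6 frames
    dst_port: Optional[int] = None      # set only for TCP/UDP segments


def classify_rdma_transport(h: FrameHeaders) -> str:
    """Return a coarse label for the transport that carries this frame."""
    if h.ethertype == ETHERTYPE_ROCE_V1:
        # No IP header at all, so the frame cannot cross an IP router.
        return "roce_v1"
    if h.ethertype in (ETHERTYPE_IPV4, ETHERTYPE_IPV6):
        if h.ip_protocol == IPPROTO_UDP and h.dst_port == ROCE_V2_UDP_PORT:
            # Routable, but UDP itself provides no congestion control.
            return "roce_v2"
        if h.ip_protocol == IPPROTO_TCP:
            # iWARP traffic looks like any other TCP flow to a switch.
            return "tcp"
    return "other"


if __name__ == "__main__":
    samples = [
        FrameHeaders(ETHERTYPE_ROCE_V1),
        FrameHeaders(ETHERTYPE_IPV4, IPPROTO_UDP, ROCE_V2_UDP_PORT),
        FrameHeaders(ETHERTYPE_IPV6, IPPROTO_TCP, 5000),
    ]
    for s in samples:
        print(classify_rdma_transport(s))
```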
For HPC cluster applications, the T6 adapters reduce RDMA verbs latency from about 7µs in T3 to about 1µs, providing application performance similar to other RDMA technologies at a much lower cost.

Summary

There are a wide range of high performance computing applications, including:

iWARP frees up memory bandwidth and valuable CPU cycles to allow focused processing of HPC applications, by taking advantage of an embedded TCP offload engine (TOE) and RDMA capabilities for direct application access and kernel bypass. With a number of fabrics converging towards Ethernet as a unified wire, the technology stands out in providing the lowest cost clustering solution by allowing users to leverage their pre-installed Ethernet networks in the datacenter.

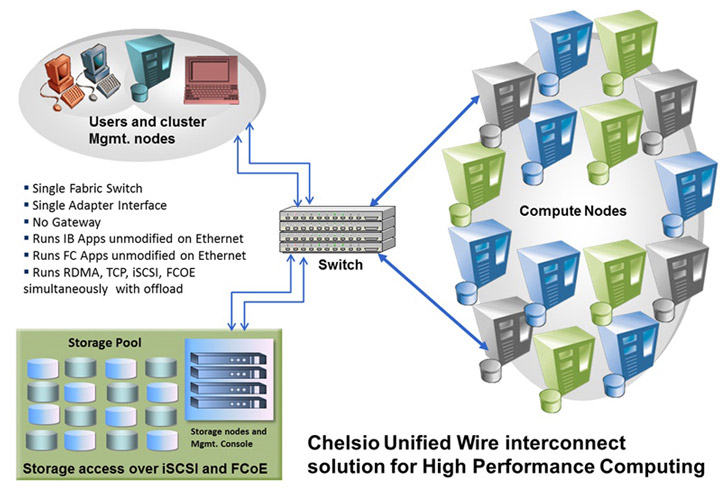

Chelsio delivers the optimal Ethernet interconnect solution for all High Performance Computing environments. As the diagram above shows, Chelsio Unified Wire adapters can run iWARP RDMA, TCP, and iSCSI simultaneously with full offload and deliver full wire speed throughput and extremely low latency between the computing nodes, the storage resources, and the user and cluster management nodes in any HPC environment.

Resources

- The Chelsio Terminator 6 ASIC (White Paper)

- Low Latency Performance at 100GbE (Benchmark Report)

- Industry’s First 100G Offload with FreeBSD (Benchmark Report)

- Meeting Today’s Data Center Challenges (Chelsio, HPCwire, Tabor Report)

- Introducing NVMe over 100GbE iWARP Fabrics (Benchmark Report)

- Chelsio Precision Time Protocol (PTP) (White Paper)

- Resilient RoCE: Misconceptions vs. Reality (White Paper)

- InfiniBand’s Fifteen Minutes (White Paper)

- 40Gb Ethernet: A Competitive Alternative to InfiniBand (IBM Benchmark Report)